U.S. Threat Hunter Fights AI-Driven Election Manipulation by Foreign Foes

Foreign adversaries are using AI to try to influence U.S. elections, according to a top threat hunter.

In June, as top intelligence and national security officials prepared for the crucial stretch of the election season, a new OpenAI employee was being shuttled around Washington to brief them on a new threat from abroad.

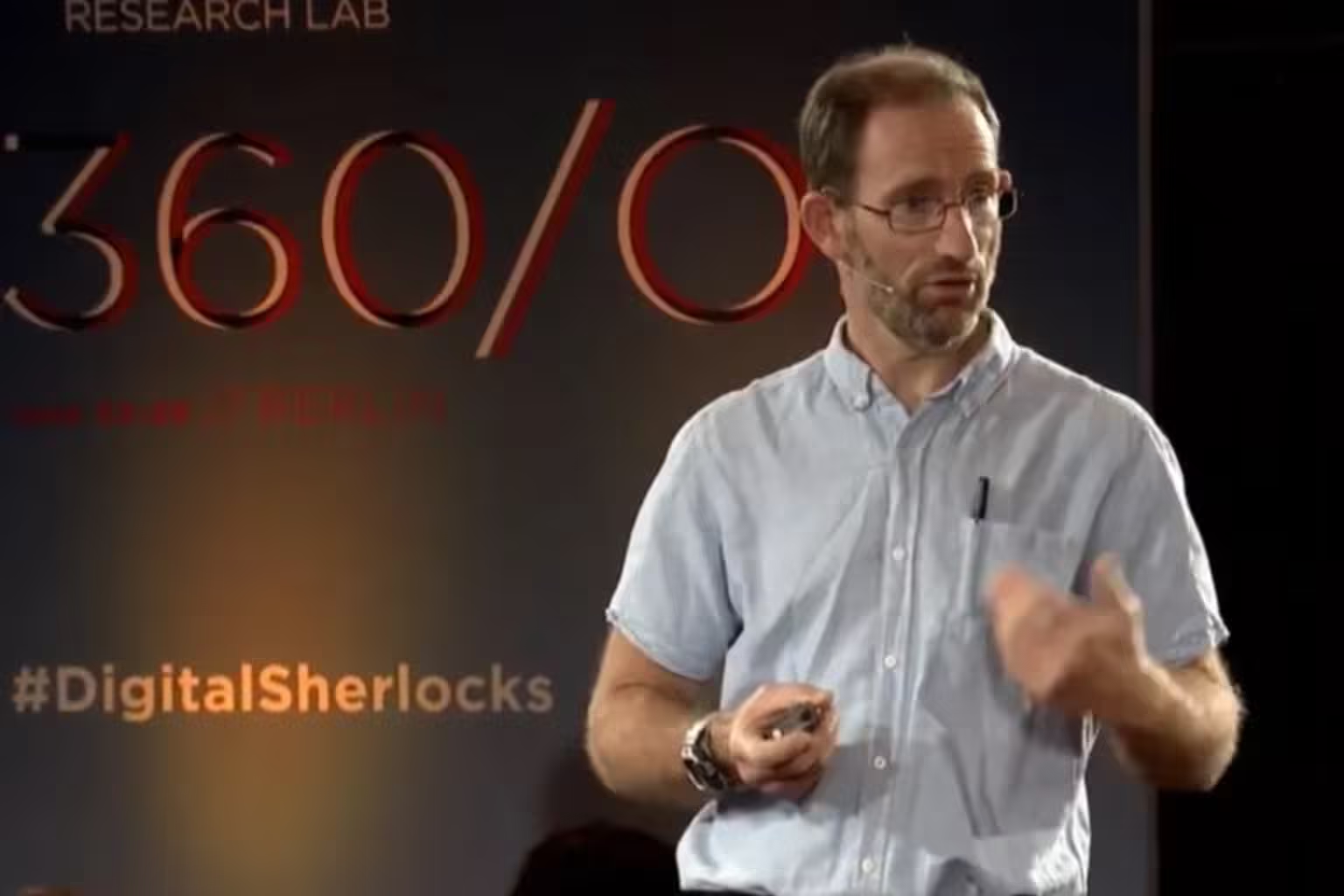

Ben Nimmo, the lead threat investigator at the well-known AI pioneer, had found evidence that Russia, China, and other countries were using its main product, ChatGPT, to generate fake political posts on social media in an attempt to shape the conversation online. Nimmo, who had joined OpenAI only in February, was shocked to see that government officials had printed out his report and had marked up and tabbed its key findings about the operations.

That attention underscored Nimmo’s place at the forefront of efforts to counter the major boost that AI can give to foreign adversaries’ disinformation campaigns. Nimmo was one of the first researchers to uncover how the Kremlin meddled in U.S. politics online in 2016. Now tech companies, the government, and other researchers are counting on him to catch foreign adversaries who use OpenAI’s tools to sow chaos in the weeks before the November 5 election.

The 52-year-old Englishman says that Russia and other foreign actors are so far mostly just “experimenting” with AI, usually in unsophisticated campaigns that reach few U.S. voters. However, OpenAI and the U.S. government are bracing for Russia, Iran, and other countries to get better at using AI. They believe the best defense is to expose and disrupt operations before they gain traction.

Katrina Mulligan, a former Pentagon official who attended the briefings with Nimmo, said it is important to build that “muscle memory” so that when bad actors make mistakes, they are easy to spot.

Nimmo released a report on Wednesday saying that OpenAI had disrupted four separate operations targeting elections around the world this year. One of them was an Iranian effort to deepen partisan divisions in the United States by posting social media comments and long-form articles about U.S. politics, the conflict in Gaza, and Western policies toward Israel.

According to Nimmo’s report, OpenAI has identified 20 operations and fake networks around the world this year. In one of them, an Iranian was using ChatGPT to refine malicious software designed to take over Android devices. In another, a Russian company used ChatGPT to generate fake news stories and OpenAI’s image generator, Dall-E, to create violent, cartoonish images of fighting in Ukraine in order to attract more attention on social media.

Nimmo stressed that none of those posts went viral. But he said he remained vigilant because the election campaign was not yet over.

Researchers say Nimmo’s work for OpenAI is especially important because other companies, particularly X (formerly Twitter), are not doing enough to fight disinformation. But some of his peers in the field worry that OpenAI may be downplaying how its products can amplify threats during an election year.