AMD Aims to Compete with Nvidia by Boosting MI325X Chip Production

AMD will begin mass production of its MI325X AI chip in the fourth quarter as it tries to become more competitive in a market dominated by Nvidia. New MI350 chips with a faster architecture and more memory will follow in 2025.

AMD announced on Thursday that it would begin mass production of the MI325X, a new version of its AI chip, in the fourth quarter of this year, as it works to strengthen its position in the Nvidia-dominated AI chip market. At an event in San Francisco, the company said it will release its next generation of chips, the MI350 series, in the second half of 2025. In addition to more memory, these chips will use a new architecture that AMD says will make them much faster than the earlier MI300X and MI250X chips.

Demand for AI processors from big tech companies such as Microsoft and Meta Platforms has far outstripped what Nvidia and AMD can produce, allowing the chipmakers to sell as many chips as they can make. That demand has driven a sharp rally in chip stocks over the past two years, and AMD's shares are up about 30% since their low point in early August. However, investors are watching closely how these companies improve their technology to judge whether they can sustain this momentum.

On Thursday afternoon, AMD stock was down 3.3%, Nvidia stock was up 1.7%, and Intel stock was down 0.5%. Kinngai Chan, a research analyst at Summit Insights, noted that AMD had not yet announced any new customers. He added that the stock had risen before the event because investors anticipated "something new."

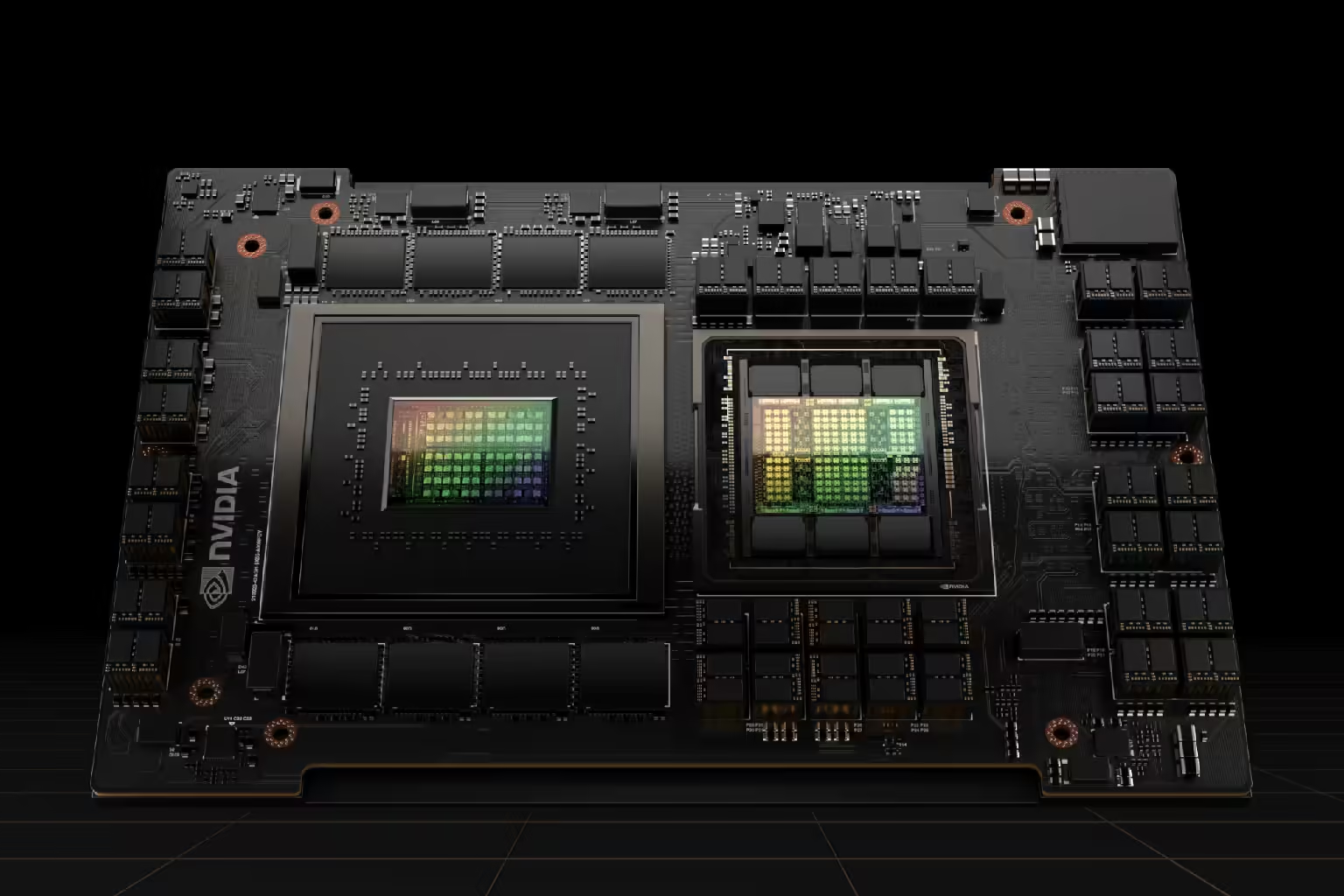

The California-based company said the MI325X will begin shipping to customers such as Super Micro Computer in the first quarter of 2025. AMD designed the chip to compete with Nvidia's Blackwell architecture.

AMD Boosts AI Chip Performance with New MI325X

The MI325X is based on the same architecture as AMD's MI300X, which came out last year, but incorporates a new type of memory that AMD says will speed up AI calculations. AMD also showed off several networking chips designed to move data faster between chips and systems in data centers.

The company also said a new version of its server central processing unit (CPU) design, the Turin family, is now available. One chip in the family is designed to keep graphics processing units (GPUs) continuously supplied with data, which speeds up AI processing. The top-tier chip, priced at $14,813, has nearly 200 processing cores. All of the processors in the line use the Zen 5 architecture, which AMD says delivers up to 37% faster performance for advanced AI data processing.

In July, AMD raised its forecast for AI chip sales for the year to $4.5 billion from $4 billion. Demand for AMD's MI300X chips has surged with the widespread adoption of generative AI products. According to LSEG estimates, AMD is expected to report $12.83 billion in data center revenue this year, while Wall Street expects Nvidia to report $110.36 billion in data center revenue. Data center revenue serves as a proxy for sales of the AI chips needed to build and run AI applications.