AI Misinformation Surge: U.S. Voters at Risk of Manipulation

AI-powered “deepfake” videos attacking politicians have raised concerns about voter manipulation in the upcoming U.S. presidential election. This has prompted calls for tech giants to tighten controls on generative AI.

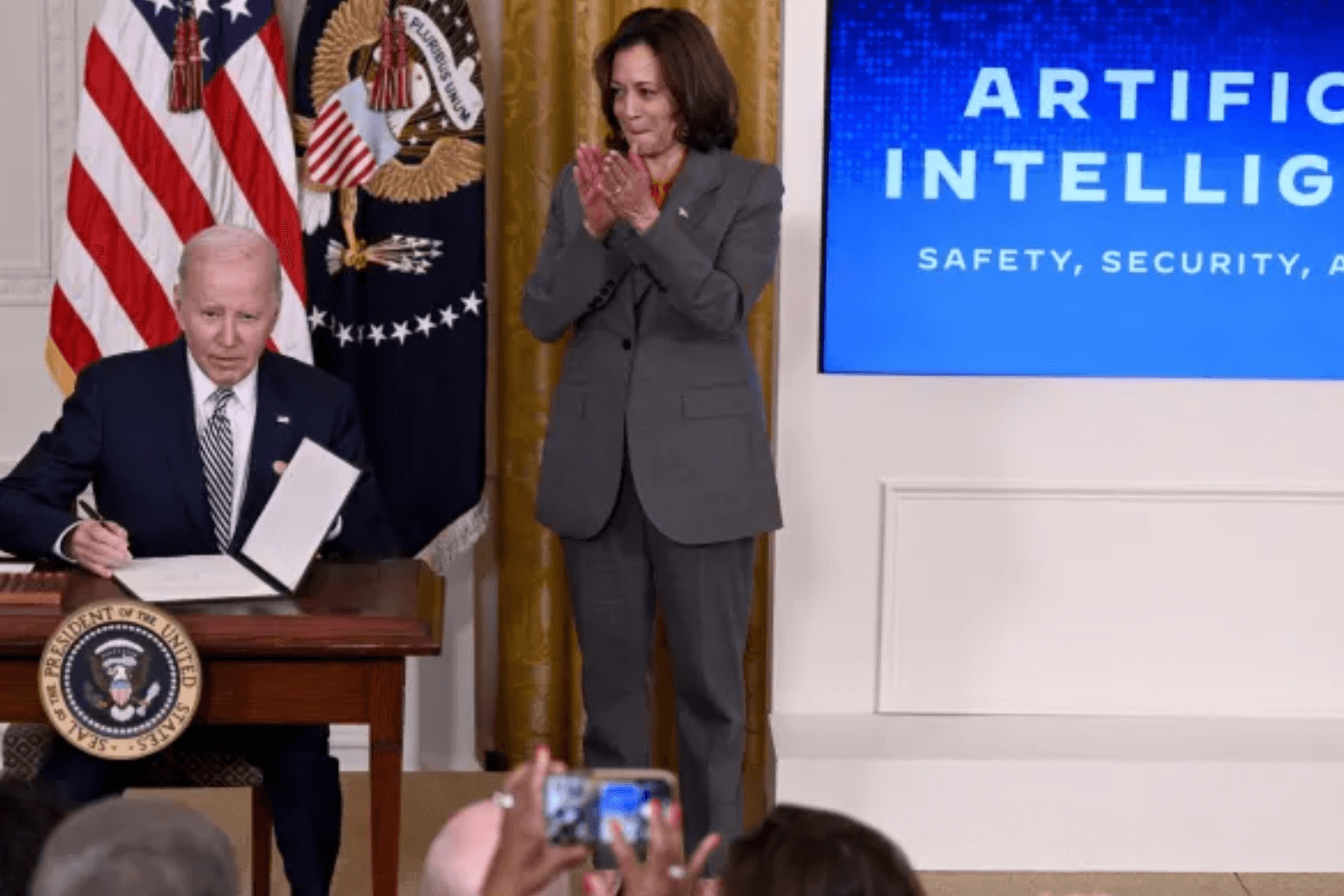

A wave of deepfakes mocking Kamala Harris and Joe Biden, along with a fake image of Donald Trump being arrested, has raised concerns about the AI-powered spread of false political information and its ability to sway voters as the race for the U.S. presidency heats up.

Researchers warn that tech-enabled fakery could be used to steer voters toward or away from candidates, or even to keep them from going to the polls at all, in November's election, which many are calling America’s first AI election. That could heighten tensions in a climate that is already deeply divided.

A recent wave of AI-generated falsehoods has prompted calls for tech giants to tighten controls on generative AI before the election. Many of these companies have scaled back content moderation on social media.

Elon Musk drew heavy criticism last week when he shared with his 192 million X followers a deepfake video purporting to feature Vice President Harris, the likely Democratic candidate. In it, a voice that sounds like Harris calls President Joe Biden crazy. The voice then says that Harris “doesn’t know the first thing about running the country.”

The only sign that the video was a spoof was a laughing emoji. Musk did not say that the video was meant as satire until much later. Researchers worried that viewers might have mistakenly concluded that Harris was mocking herself and Biden.

AFP's fact-checkers have exposed other AI hoaxes that caused concern. A doctored video that circulated on X last month appeared to show Biden swearing at his critics, including using anti-LGBTQ slurs, after he announced he would not seek re-election and endorsed Harris for the Democratic nomination.

The footage was traced to one of Biden’s speeches, broadcast live on PBS, in which he denounced political violence after the July 13 attempt on Trump’s life.

PBS said the doctored video was a deepfake that used its branding to deceive viewers.

An image shared across many platforms weeks earlier appeared to show police forcibly arresting Trump after a New York jury found him guilty of falsifying business records linked to a hush money payment to porn star Stormy Daniels.

Lucas Hansen demonstrated to AFP how a single AI bot could affect the outcome of an election by churning out a flood of fake tweets. Observers say that deception on this scale could fuel public anger at the voting process.

A poll released last year by the media group Axios and the business intelligence company Morning Consult found that more than half of Americans think AI-enabled falsehoods will affect who wins the 2024 election. About one-third of Americans said AI would make them less trusting of the results.

In April, more than 200 advocacy groups sent a letter to tech CEOs urging them to act more quickly to strengthen the fight against AI-driven falsehoods. Their proposals included banning deepfakes in political ads and using algorithms to promote factual election content.